Engineer RAG Learning

An AI-assisted learning platform for engineering students, designed to position AI as a process coach rather than an answer engine—transforming student problem-solving activities into actionable insights for instructors.

Background 📖

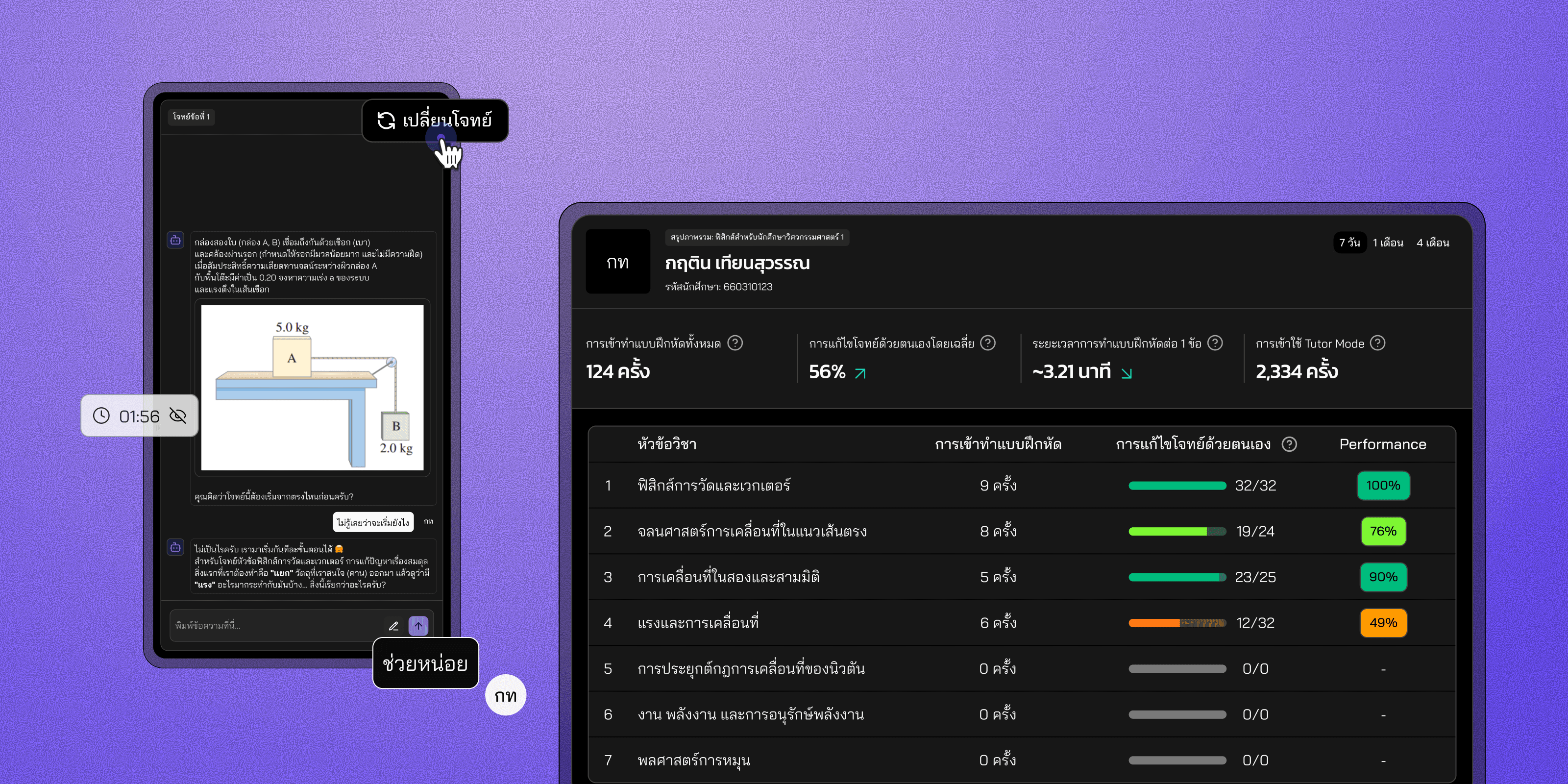

In engineering education, instructors typically assess students on their final answers, with limited visibility into how those answers are formed: whether students misunderstand a concept, make a logic error, or struggle with application.

The core problem is not students’ ability to learn, but instructors’ lack of visibility into students’ thinking processes.

Role & Responsibility 🎩

UX/UI Designer. Responsibilities:

Information Architecture: Designed the system structure for 3 roles: Student flow, Instructor dashboard, Admin config

UX Flow: Step-by-step exercise flows with AI-student interaction logic, CMS management for Instructors, and user management and system configuration for Admins.

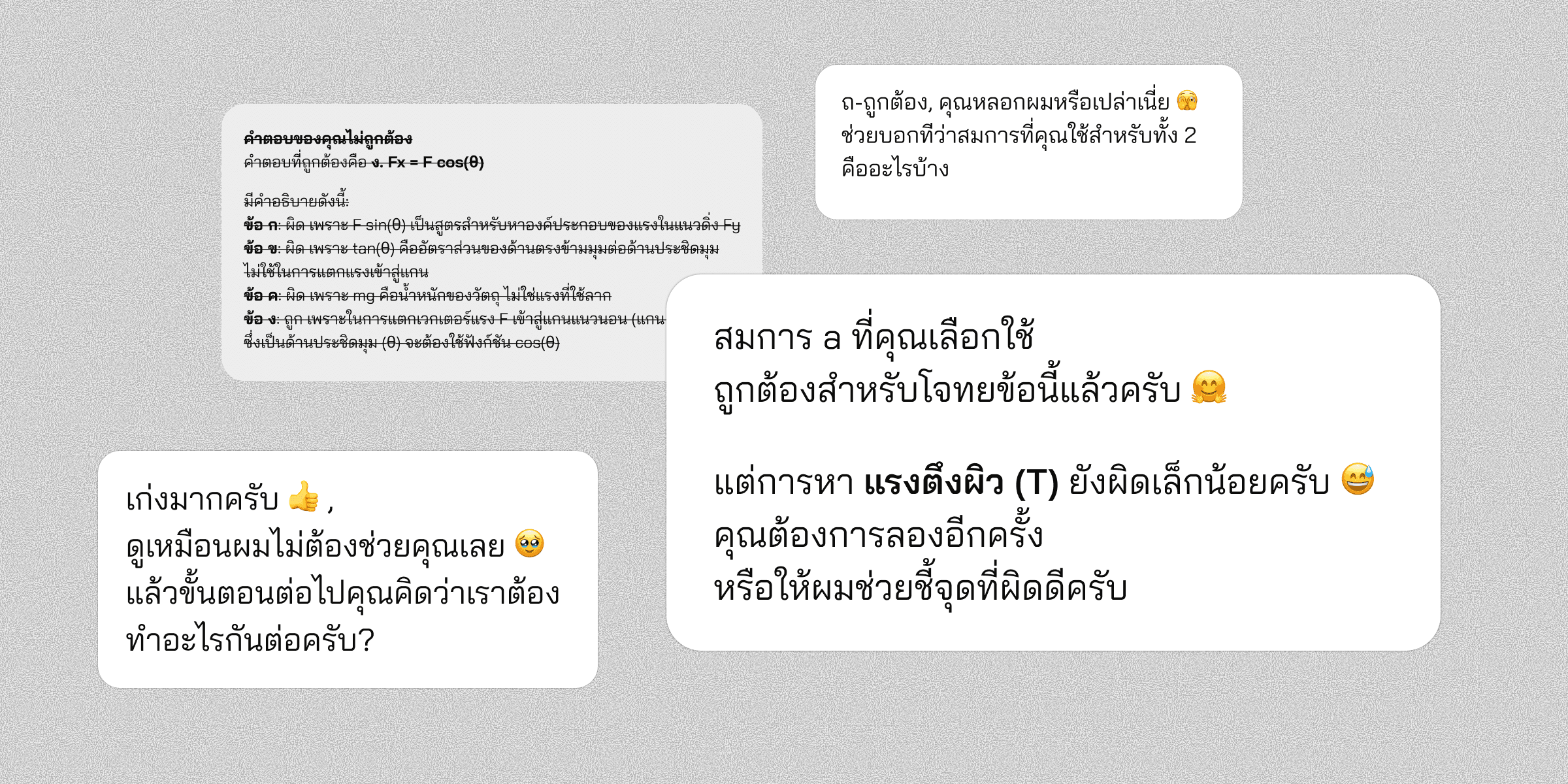

AI Conversation Design: Encouraging, supportive tone; hint/final answer delivery; polite/friendly pronouns

UI Design: Interface layouts and visuals

Goal 🎯

Goals (Instructor-focused)

Capture process-level learning signals (e.g., repeated mistakes, hint usage)

Differentiate types of errors (logic vs concept vs calculation)

Goals (Student-focused)

Encourage step-by-step reasoning rather than answer memorization

Provide a low-stress practice environment where students feel safe to explore and make mistakes, while the system passively captures learning signals

Constraints & Design Considerations 💬

Open LLM reliability: the domain must be restricted to maintain accuracy and keep token usage under control

Learning outcomes can’t be judged by final answer alone; the system must capture and interpret students’ learning behaviours (e.g. repeated mistakes, time spent, hint usage) as measurable signals.

Key Design Decisions 🗝️

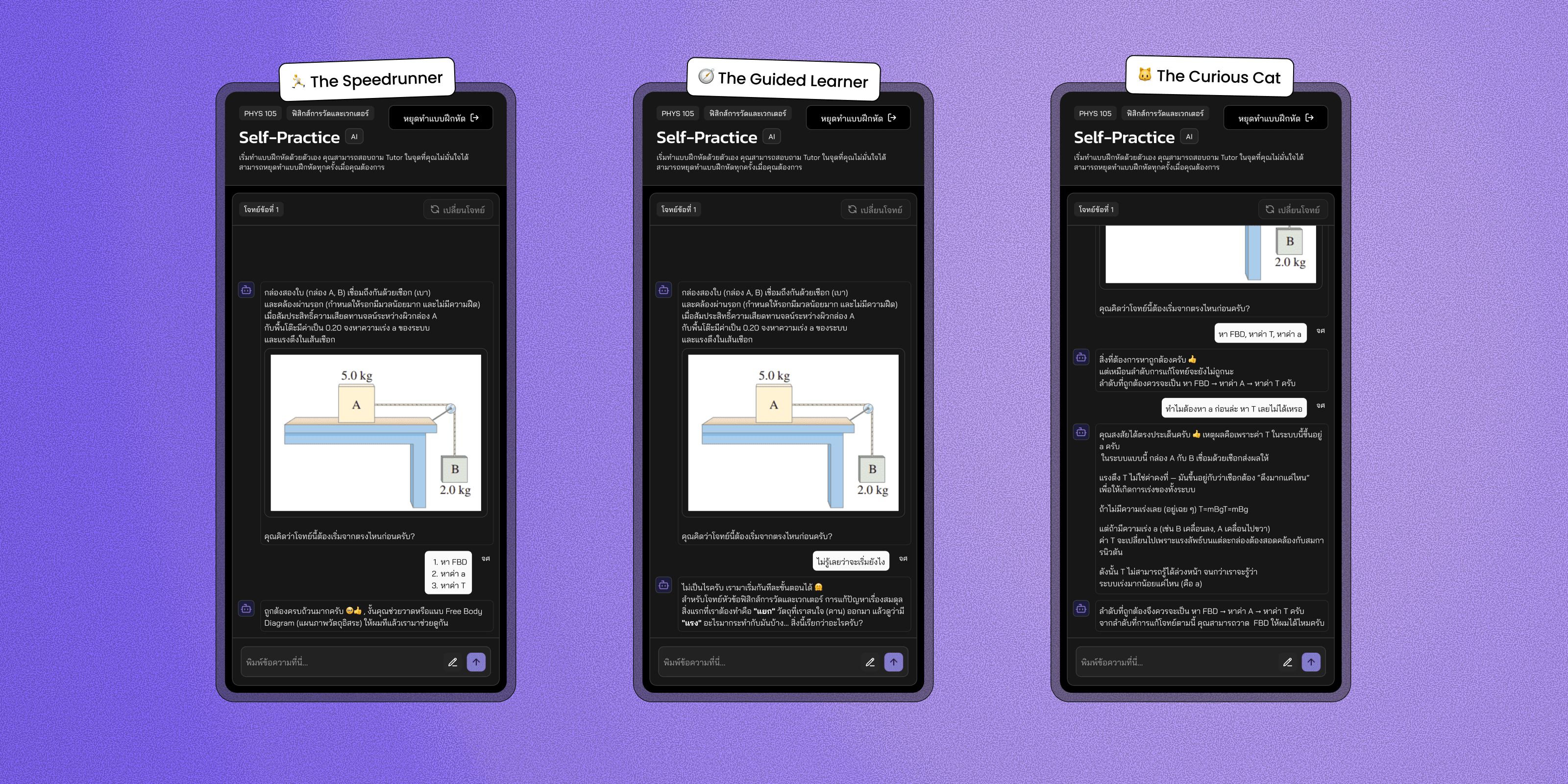

Decision 1: AI Tutor tone instead of quiz-style Q&A

📝 Context :

The initial idea proposed by the AI team was a multiple-choice Q&A flow with instant answers. However, instructors could not observe how students reasoned, and learning progress was difficult to measure due to guessing.

🏁 Decision : The AI was redesigned as a tutor rather than an evaluator

Open-ended questions instead of multiple choice

Hints instead of immediate answers

Follow-up questions to prompt explanation of reasoning

Decision 2: Flexible interaction flow with goal recovery

📝 Context : Students interacted with the system in many ways: skipping steps, providing only final answers, getting stuck, or asking unrelated questions.

A rigid flow caused users to drift away from learning objectives.

🏁 Decision : The AI was designed to guide users back into the intended learning flow

Clear expectations for step-by-step explanations

Asking users to explain their reasoning when final answers were given

Gradual hints when users were stuck
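
The recovery behaviour described above could be sketched as a small routing function. This is an illustration only, not the project's actual logic: it assumes the student's message has already been classified upstream (for example by the LLM), and the names `InputKind`, `HINT_LEVELS`, and `next_action`, as well as the wording of every reply, are hypothetical.

```python
from enum import Enum


class InputKind(Enum):
    """Hypothetical labels for the interaction patterns listed in the context."""
    STEP_BY_STEP = "step_by_step"
    FINAL_ANSWER_ONLY = "final_answer_only"
    STUCK = "stuck"
    OFF_TOPIC = "off_topic"


# Hypothetical hint ladder: each level reveals a little more than the last.
HINT_LEVELS = [
    "Which concept or formula applies here?",
    "Try writing out the known quantities first.",
    "Here is the first step worked out. Can you continue from it?",
]


def next_action(kind: InputKind, hints_given: int) -> str:
    """Return the tutor's next message for an already-classified student input."""
    if kind is InputKind.FINAL_ANSWER_ONLY:
        # Ask for reasoning instead of grading the answer straight away.
        return "Thanks! Can you walk me through how you got that answer?"
    if kind is InputKind.STUCK:
        # Clamp so repeated requests stay at the most detailed hint.
        level = min(hints_given, len(HINT_LEVELS) - 1)
        return HINT_LEVELS[level]
    if kind is InputKind.OFF_TOPIC:
        # Acknowledge, then steer back to the learning objective.
        return "Good question for later. Let's get back to the current step."
    return "Nice reasoning. What would your next step be?"
```

Keeping the hint ladder as plain data means the wording and number of hint levels can change without touching the routing logic.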

Decision 3: Measuring learning behaviour, not just outcomes

📝 Context : Capturing only final answers was insufficient to evaluate learning progress.

🏁 Decision : Additional behavioural signals were recorded, such as:

Time spent per question

Hint requests

Repeated mistakes within the same concept
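
As a rough sketch of how these signals might be stored, the record below captures one attempt per question. The field names and the `repeated_mistakes` helper are assumptions made for illustration. The error labels follow the logic / concept / calculation split from the instructor goals. The case study does not specify the real schema.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class QuestionAttempt:
    """One student's attempt at one question (hypothetical schema)."""
    question_id: str
    concept: str
    seconds_spent: float          # time spent per question
    hints_requested: int = 0      # hint requests
    # Error labels observed during the attempt: "logic", "concept", or "calculation".
    errors: List[str] = field(default_factory=list)


def repeated_mistakes(attempts: List[QuestionAttempt]) -> Dict[str, int]:
    """Count errors per concept, keeping only concepts with more than one error."""
    counts: Counter = Counter()
    for attempt in attempts:
        counts[attempt.concept] += len(attempt.errors)
    return {concept: n for concept, n in counts.items() if n > 1}
```

For example, two attempts in the same concept that each contain one error would surface that concept with a count of 2, while a concept with a single error would be left out.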

🚧 Trade-offs & Limitations

Added 1-minute delay before replacing repeated problems to reduce token usage.

Why: token cost & latency constraints with open LLM

Impact: slight delay but preserves reliable learning behaviour signals
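
One possible reading of this trade-off is a per-student cooldown: a replacement problem is only generated if at least one minute has passed since the last one. The sketch below assumes that reading. `ReplacementGate` and `may_replace` are hypothetical names, and the real system may enforce the delay differently.

```python
import time
from typing import Callable, Dict

COOLDOWN_SECONDS = 60.0  # the 1-minute delay mentioned above


class ReplacementGate:
    """Allows a replacement problem at most once per cooldown window per student."""

    def __init__(self, cooldown: float = COOLDOWN_SECONDS,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._cooldown = cooldown
        self._clock = clock  # injectable so the gate can be tested without sleeping
        self._last: Dict[str, float] = {}  # student_id -> time of last replacement

    def may_replace(self, student_id: str) -> bool:
        """Return True and start a new window if the cooldown has elapsed."""
        now = self._clock()
        last = self._last.get(student_id)
        if last is not None and now - last < self._cooldown:
            return False  # still cooling down: skip the extra LLM call
        self._last[student_id] = now
        return True
```

A denied request does not restart the window, so a student who asks repeatedly is not pushed back further each time.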

Expected Outcomes & Signals 🔮

Expected Outcomes :

Student: Engage more in step-wise practice.

Instructor: Gain insight into individual and group performance, and identify the areas needing intervention.

Success Signals :

Decrease in repeated mistakes across sessions

Reduction in hint drop-off rates

Improved completion rates and lower dropout points
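
Two of these signals could be computed from per-session summaries along the following lines. This is a minimal sketch under stated assumptions: the session dictionaries, their keys (`assigned`, `completed`, `repeated_mistakes`), and both function names are hypothetical, and no real results are implied.

```python
from typing import Dict, List


def completion_rate(sessions: List[Dict[str, int]]) -> float:
    """Share of sessions in which every assigned question was completed."""
    if not sessions:
        return 0.0
    done = sum(1 for s in sessions if s["completed"] >= s["assigned"])
    return done / len(sessions)


def mistakes_decreased(sessions: List[Dict[str, int]]) -> bool:
    """True if the latest session has fewer repeated mistakes than the first.

    Sessions are assumed to be in chronological order.
    """
    if len(sessions) < 2:
        return False
    return sessions[-1]["repeated_mistakes"] < sessions[0]["repeated_mistakes"]
```

Comparing only the first and last session is a deliberately simple choice. A dashboard would likely want a trend over all sessions instead.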